Imagine a world where AI writes captivating poems, creates realistic images, and even codes complex software – that’s the power of Generative AI. But with great power also comes great responsibility, and ensuring the security of these systems is paramount. Enter Microsoft’s PyRIT, an open-source framework that is set to revolutionize the way our Red Teams and Secure Generators build AI.

What is PyRIT?

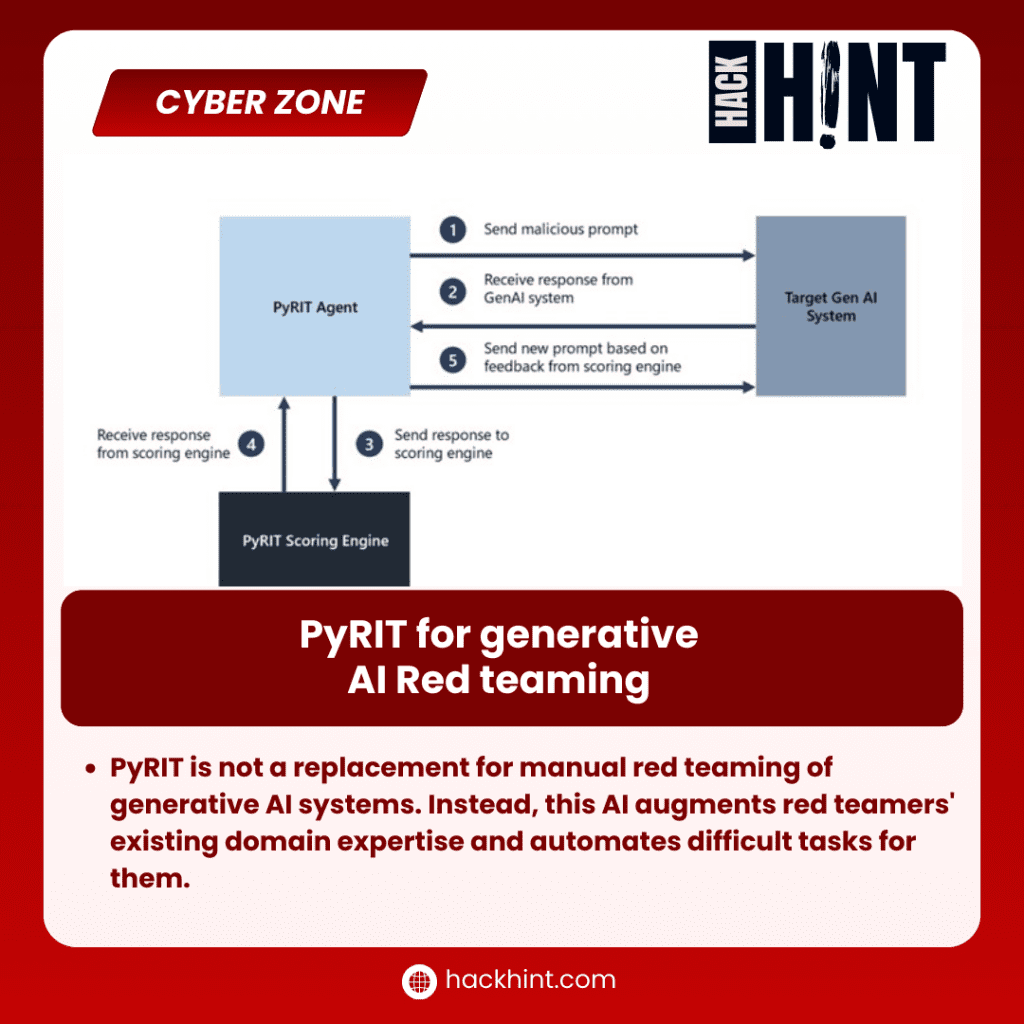

PyRIT, short for Python Risk Identification Toolkit for Generative AI, is a powerful tool that automates the red teaming process for generative AI systems. This means that it simulates real-world attacks, or “adversarial signals,” to identify potential vulnerabilities before malicious actors can exploit them.

Why is it a Game Changer?

Traditionally, red teaming generative AI was a manual, time-consuming process. PyRIT changes the game by:

- Automating prompt generation: It can generate thousands of malicious prompts in minutes, vastly accelerating testing.

- Offering a wider scope: It explores a diverse range of attack vectors, uncovering vulnerabilities manual testing might miss.

- Providing objective scoring: Its built-in scoring engine removes subjectivity by quantifying the risk posed by each output.

Benefits for Everyone

PyRIT’s impact extends beyond individual developers and security teams:

- Enhanced security: Early vulnerability identification leads to more secure and robust generative AI systems.

- Increased trust: Demonstrating a commitment to security fosters wider adoption and responsible development.

- Empowering innovation: Accessible red teaming tools allow developers to experiment safely and push the boundaries of generative AI.

Frequently Asked Questions (FAQs)

- Who can use PyRIT? PyRIT is open-source and accessible to anyone, from individual developers to large organizations.

- Is it difficult to use? PyRIT is designed to be user-friendly, with clear documentation and tutorials.

- What types of vulnerabilities does it detect? PyRIT can identify a wide range of vulnerabilities, including bias, security flaws in generated outputs, and potential misuse of the system.

Explore PyRIT Further

- Microsoft PyRIT Documentation: https://www.microsoft.com/en-us/security/blog/2023/08/07/microsoft-ai-red-team-building-future-of-safer-ai/

- PyRIT Github Repository: https://github.com/Azure/PyRIT

- Blog Post: Announcing Microsoft’s open automation framework to red team generative AI Systems: https://www.microsoft.com/en-us/security/blog/2023/08/07/microsoft-ai-red-team-building-future-of-safer-ai/