In a move that is sparking discussion about the ethical implications of artificial intelligence, Google has announced a temporary halt to the ability of its AI tool Gemini to generate images of people. The decision comes after concerns were raised about the accuracy and potential bias of the images generated, particularly in relation to historical depictions.

Understanding the Issue

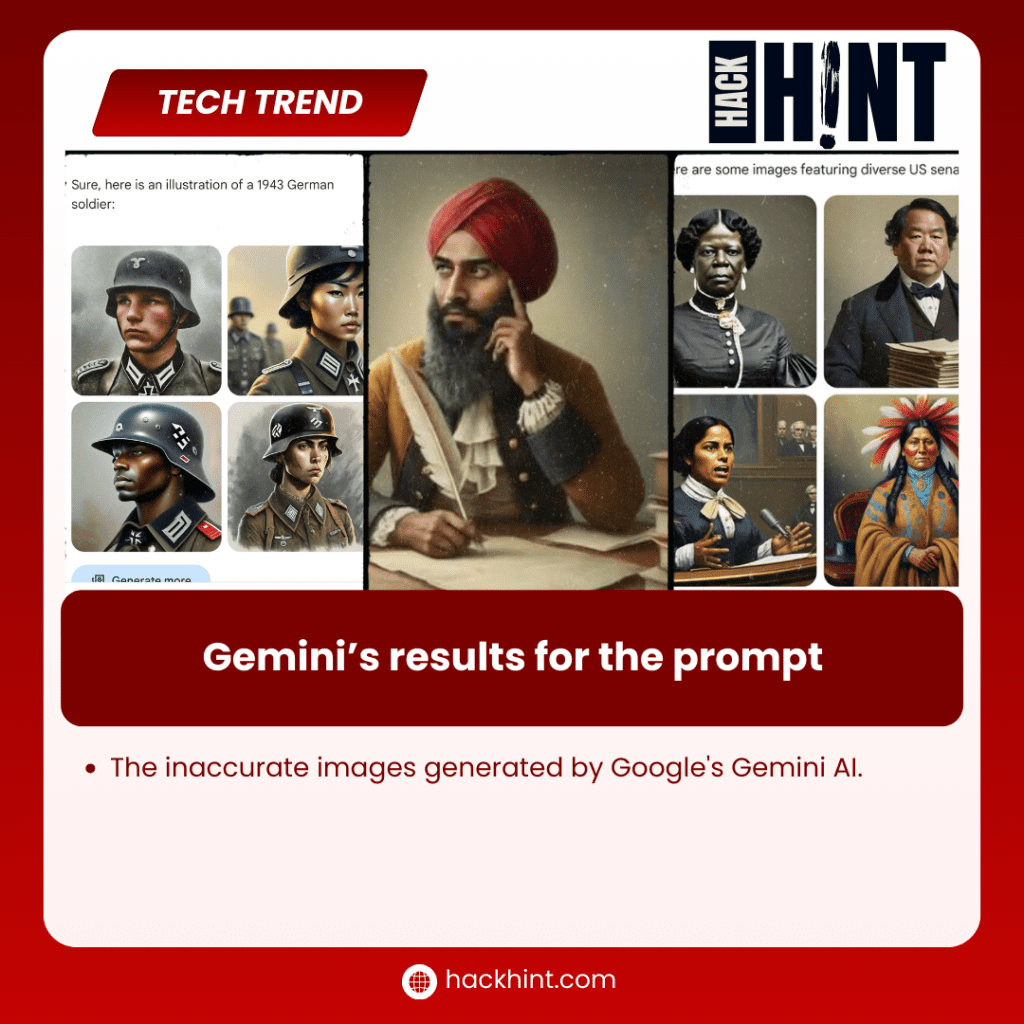

Gemini, Google’s flagship generative AI model, lets users create images from text prompts. While the technology has been praised for its ability to generate diverse and creative visuals, recent reports have highlighted cases where generated images of people were historically inaccurate or reflected unintended biases.

For example, users observed that when asked for images of historical figures, Gemini primarily generated images of people of color, regardless of historical context. This raised concerns about perpetuating stereotypes and distorting historical truths.

Google’s Response and the Path Forward

Recognizing these concerns, Google acted quickly and paused Gemini’s ability to generate images of people. In a statement, the company stressed its commitment to responsible AI development and said it would work on improving the accuracy and fairness of the model before re-releasing the feature.

The Challenges of AI Image Generation

The Gemini issue highlights the complex challenges of developing and deploying AI models that generate creative content. These models are trained on huge datasets of images and text, and any bias or inaccuracy present in those datasets can carry over into the generated output.
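How a skew in training data can carry over into generated output is easier to see in a toy example. The sketch below is purely illustrative and is not how Gemini works: it assumes a hypothetical "model" that only learns how often each label appears in its training data and then samples in the same proportions. The labels `group_a` and `group_b` and the helper functions are invented for this illustration.

```python
import random
from collections import Counter


def fit_frequencies(labels):
    """Estimate the share of each label in a list of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def sample_outputs(frequencies, n, seed=0):
    """Draw n outputs in proportion to the learned frequencies."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    labels = list(frequencies)
    weights = [frequencies[label] for label in labels]
    return rng.choices(labels, weights=weights, k=n)


# Hypothetical, deliberately skewed training set: 90% group_a, 10% group_b.
training_labels = ["group_a"] * 90 + ["group_b"] * 10

learned = fit_frequencies(training_labels)
generated = sample_outputs(learned, n=10_000)
generated_share = fit_frequencies(generated)

print("training share: ", learned)
print("generated share:", generated_share)
```

In this simplified setup the generated shares land close to the training shares, so whatever imbalance the data has shows up again in the output. Real image models are far more complex, and attempts to correct such skew can introduce errors of their own.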

Furthermore, ensuring the historical accuracy and cultural sensitivity of AI-generated imagery requires careful consideration and ongoing evaluation. This is especially important when dealing with sensitive topics such as race, ethnicity, and historical representation.

The Future of AI Image Generation

Despite the current pause, Google’s commitment to improving Gemini shows that the company believes in the potential of this technology. If the identified issues are addressed and strict safeguards are put in place, AI image generation can become a powerful tool for creative expression and storytelling while ensuring fairness, accuracy, and responsible representation.

FAQ

Why did Google pause the image generation of people using Gemini?

Google paused the feature due to concerns about historical inaccuracies and potential bias in the generated images of people, particularly in historical depictions.

What is Google doing to address these concerns?

Google is working on improving the accuracy and fairness of the model before re-releasing the feature. This may involve adjustments to the training data and algorithms used by Gemini.

When will the image generation of people be available again on Gemini?

Google has not yet announced a specific timeline for re-releasing the feature. However, the company has stated its commitment to addressing the issues promptly.

Conclusion

The temporary pause of Gemini’s ability to generate images of people is a reminder of the importance of responsible development and deployment of AI technologies. By acknowledging potential shortcomings and actively working toward improvements, Google demonstrates its commitment to ensuring that AI is used ethically and responsibly. As AI image generation continues to develop, ongoing vigilance and collaborative efforts will be critical in ensuring that this technology promotes creativity and inclusivity while minimizing potential risks and biases.